Medical Report Information Extraction Pipeline

医疗报告信息提取

Tools: Python, Pandas, Regular Expressions (re), Gender-Guesser, OCR Text Parsing

Final Project

This project focuses on extracting structured metadata from scanned medical reports that have undergone Optical Character Recognition (OCR). The documents span from the 1920s to 1980s and often contain formatting inconsistencies or OCR-related errors. Using rule-based parsing techniques, the system identifies key information such as patient details, diagnoses, medical procedures, and institutional references for use in research contexts.

本项目旨在从经过光学字符识别(OCR)处理的扫描医疗报告中提取结构化元数据。文档年代从 1920 年代至 1980 年代,常存在格式不统一或 OCR 识别错误等问题。系统采用基于规则的文本解析方法,识别并提取包括患者信息、诊断内容、医疗操作及机构相关字段在内的关键数据,用于后续研究分析。

Original Document

Sample Scans (扫描示例)

OCR Output Matching Sample Scan(与左侧扫描对应的 OCR 文本)

FORM NO. 812

YO

The University of Chicago

University Clinics

LABORATORY OF SURGICAL PATHOLOGY.

Path. Diagnosis.

Anal fistula, pyogenic

Name

McEachern, Neil

Surgeon.

Dr. Curtis

Disposal of Tissue:-

Stored

Clinical Diagnosis.

Anal fistula.

No. of Blocks.

Bacteriology-

20

Paraffin

Path. No.

Unit No.

Date.

4 2141 1003

28

02 HQ2

Museum

Destroyed

Celloidin.

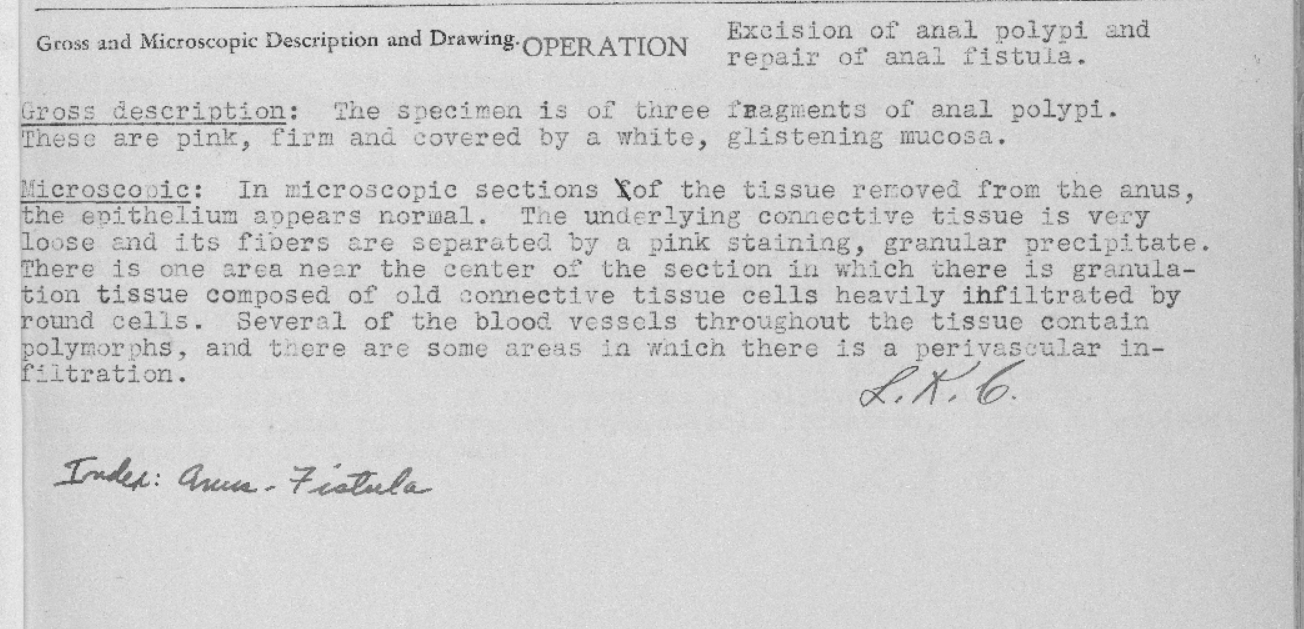

Gross and Microscopic Description and Drawing. OPERATION

Excision of anal polypi and

repair of anal fistula.

Gross description: The specimen is of three fragments of anal polypi.

These are pink, firm and covered by a white, glistening mucosa.

Microscopic: In microscopic sections of the tissue removed from the anus,

the epithelium appears normal. The underlying connective tissue is very

loose and its fibers are separated by a pink staining, granular precipitate.

There is one area near the center of the section in which there is granula-

tion tissue composed of old connective tissue cells heavily infiltrated by

round cells. Several of the blood vessels throughout the tissue contain

polymorphs, and there are some areas in which there is a perivascular in-

filtration.

L.X.C.

Index: aruus- Fistula

Operational Thinking

This work was part of an internship role, where I took ownership of developing a rule-based parsing pipeline to extract structured information from OCR-processed historical medical reports. The dataset consisted of scanned documents dating from the 1920s to the 1980s, with the initial batch coming from the 1920s. These reports were mostly typewritten or handwritten, and the OCR output was often noisy, with issues such as inconsistent formatting, missing fields, and misplaced punctuation.

As a student without a formal computer science background, I independently researched relevant techniques and evaluated possible approaches. Given the lack of annotated data and the instability introduced by OCR errors, I think that NLP or machine learning models were not ideal for this context.

Instead, I designed and implemented a traditional rule-based parsing pipeline to explore whether structured information could be reliably extracted under such noisy and inconsistent conditions. I iteratively refined the pipeline through testing and adjustments based on real-world samples.

The implementation reads OCR text line by line and applies custom extraction functions for each target field (e.g., patient name, age, diagnosis, physician, procedure), using regular expressions and contextual heuristics. Post-processing steps were added to standardize field formats and handle missing values—such as `unit_no`, which may appear on later pages. The structured results are exported to Excel and CSV formats for downstream use.

To facilitate future extension and maintenance, the pipeline was designed in a modular fashion, allowing for adaptation to different document templates across institutions or time periods. The structure also enables potential integration with OCR engines or lightweight classification modules to support an end-to-end workflow from scanned image to structured data.

这项工作是我实习的一部分,我负责开发一个基于规则的解析流程,用于从OCR处理的历史医疗报告中提取结构化信息。该数据集包含20世纪20年代至80年代的扫描文档,其中第一批来自20世纪20年代。这些报告大多是打字或手写的,OCR输出通常存在噪声,例如格式不一致、字段缺失和标点符号错位等问题。尽管没有计算机科学背景,我主动查阅资料并独立研究了相关技术路径,评估了多种可能方案。鉴于缺乏标注数据且 OCR 错误易引发结果不稳定,我判断 NLP 或机器学习模型在该场景下并不理想。作为替代方案,我设计并实现了一个传统的基于规则的解析流程,以探索在这种噪声和不一致的条件下能否可靠地提取结构化信息。我通过基于真实样本的测试和调整,不断完善该流程。该实现逐行读取 OCR 文本,并使用正则表达式和上下文启发式算法,针对每个目标字段(例如,患者姓名、年龄、诊断、医生、诊疗程序)应用自定义提取函数。添加了后处理步骤,以标准化字段格式并处理缺失值(例如“unit_no”,该值可能会出现在后续页面中)。结构化结果将导出为 Excel 和 CSV 格式,以供后续使用。为了方便未来的扩展和维护,该流程采用模块化设计,可适应不同机构或时间段的不同文档模板。该结构还支持与 OCR 引擎或轻量级分类模块集成,从而支持从扫描图像到结构化数据的端到端工作流程。